ChatGPT has become the poster child for artificial intelligence and large language models everywhere. However, if you want something more specialized or need a solution that guarantees privacy, it isn’t your only option. I’ve been running a handful of AIs on my own PC for a year now instead of paying for ChatGPT—here’s how.

## Why Run an AI Locally on Your Own PC?

ChatGPT is responsive, relatively smart, and continuously receives updates, so why bother hosting your own large language model (LLM) at all? There are three big reasons: **integration with my projects without usage fees, privacy, and specialization**.

### ChatGPT Costs Money to Use

If you’re self-hosting a smart home and want to integrate ChatGPT into your system, you’ll have to pay for access. Depending on usage, costs can range from a few cents per month to hundreds of dollars. Hosting your own AI doesn’t entirely solve this problem since you still pay for electricity, but it means you won’t unexpectedly face a cost hike or incur huge fees if you overuse the AI.

Even a powerful home PC would struggle to use more than a few dollars of electricity per day, and that assumes it runs at maximum capacity 24/7.
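As a rough sanity check on that claim, the arithmetic is power draw times hours times your electricity rate. The 600 W draw and $0.17 per kWh rate below are illustrative assumptions, not measurements, so swap in your own numbers.

```python
def daily_electricity_cost(watts: float, price_per_kwh: float, hours: float = 24.0) -> float:
    """Return the cost of running a machine at a constant power draw."""
    kwh = (watts / 1000.0) * hours  # convert watts to kilowatt-hours
    return kwh * price_per_kwh


# Assumed figures: a 600 W PC under full load, electricity at $0.17 per kWh.
cost = daily_electricity_cost(watts=600, price_per_kwh=0.17)
print(f"${cost:.2f} per day")  # prints "$2.45 per day"
```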

### Self-Hosted AIs Are Private

ChatGPT is a fantastic tool, but it isn’t private. If you’re concerned about how your data might be used or if you’re handling confidential information that cannot leave your organization, a local AI is a fantastic option.

By running an AI locally, you can ensure nothing leaves your PC. Provided your computer is secure, you can be confident that the data you provide won’t be used for future training or exposed by a security vulnerability on someone else’s servers.

### Local AI Can Be Fine-Tuned to Your Needs

Not every AI or LLM functions the same. For example, asking Gemini and ChatGPT the same question will yield slightly different answers. This variability exists in self-hosted AI as well.

OpenAI’s gpt-oss responds differently from Qwen3, and Gemma answers differently from Kimi. These open models continue to evolve, and some excel at specific tasks over others, so it is useful to be able to switch quickly between models depending on your needs.

If you need complex feedback, larger models such as Qwen3 32B are ideal. For simpler tasks like parsing basic text, a lighter model like Gemma3 4B suffices. Delegating different jobs to the appropriate model helps save resources, especially in a homelab environment.
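One way to picture that delegation is a small lookup that maps a kind of job to a model. This is only a sketch: the task categories and the model identifier strings are hypothetical placeholders, and the names your own setup exposes will differ.

```python
# Hypothetical task-to-model table; the identifiers are placeholders,
# not guaranteed to match the names shown in your model list.
MODEL_FOR_TASK = {
    "complex_feedback": "qwen3-32b",  # larger model for harder reasoning
    "text_parsing": "gemma-3-4b",     # lighter model for simple extraction
}
DEFAULT_MODEL = "gemma-3-4b"


def pick_model(task: str) -> str:
    """Return the model assigned to a task, falling back to the light default."""
    return MODEL_FOR_TASK.get(task, DEFAULT_MODEL)


print(pick_model("complex_feedback"))
print(pick_model("unknown_job"))
```

Defaulting to the lighter model keeps unclassified jobs cheap; only tasks you have explicitly marked as demanding reach the larger one.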

Beyond chat, you can also attach other AI tools that specialize in machine vision or natural language processing for more complex jobs.

## What Do You Need to Host Your Own ChatGPT?

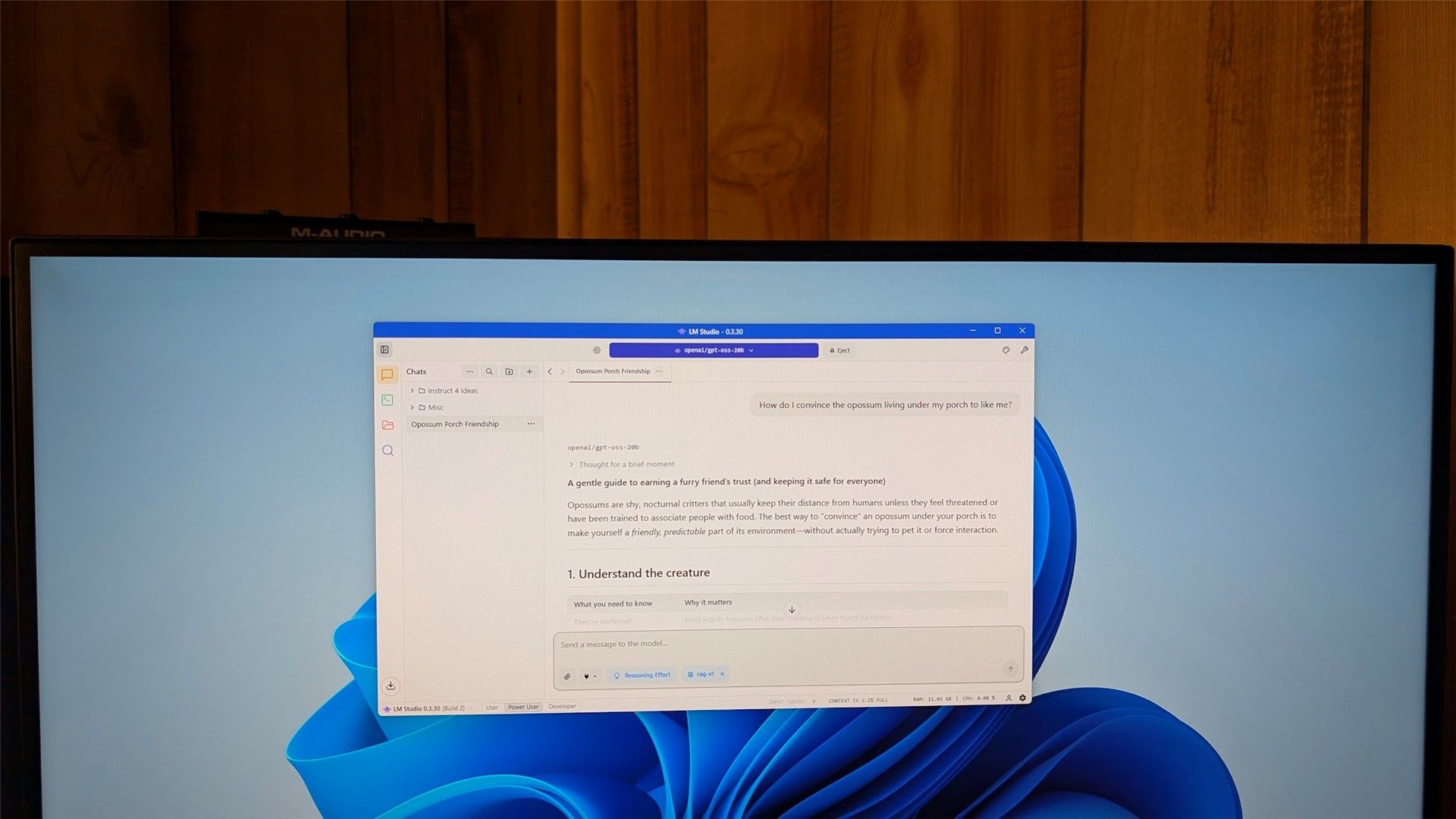

The key software is **LM Studio**, which provides a user-friendly interface to chat with local LLMs, similar to how you interact with ChatGPT. It also makes trying out new models easy.

### Hardware Requirements

- **GPU VRAM:** The biggest limiting factor is your GPU’s VRAM.
  - **16 GB VRAM** is a good middle ground to run a wide variety of capable models.
  - **12 GB VRAM** is the bare minimum.
- **Storage:** A fast SSD improves model loading/unloading times.
- **System RAM:** 32 GB or more is ideal if you want to offload some AI tasks from GPU to CPU.

Most modern gaming PCs can handle at least some models without issue.
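A common back-of-the-envelope way to judge whether a model fits is to multiply its parameter count by the bytes used per weight, then leave headroom for the context cache and runtime. The 4-bit quantization and 20% overhead below are assumptions for illustration; real usage varies with the quantization format and context length.

```python
def estimate_vram_gb(params_billions: float, bits_per_weight: float = 4.0,
                     overhead: float = 0.20) -> float:
    """Rough VRAM estimate in GB: weights plus a flat overhead fraction."""
    weight_gb = params_billions * bits_per_weight / 8.0  # billions of params * bytes each
    return weight_gb * (1.0 + overhead)


def fits(params_billions: float, vram_gb: float) -> bool:
    """True if the estimated footprint is within the available VRAM."""
    return estimate_vram_gb(params_billions) <= vram_gb


for size in (4, 32):
    print(f"{size}B model: ~{estimate_vram_gb(size):.1f} GB, fits in 16 GB: {fits(size, 16)}")
```

Under these assumptions a 4B model needs only a couple of gigabytes, while a 32B model overflows a 16 GB card, which is where offloading part of the work to system RAM comes in.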

If you’re unsure which models your system can run, there’s a handy GitHub project that recommends models based on your hardware specs and use cases.

## Running Your Own ChatGPT

Once you download and install LM Studio, launching a model is easy. Simply:

1. Click the magnifying glass icon.

2. Search for the model you want.

3. Click **Download**.

If you have a model from another source, place it in the following folder to make it appear in LM Studio’s model list:

```
C:\Users\(YOURUSERNAME)\.lmstudio\models
```

Replace `(YOURUSERNAME)` with your account name. For example:

```
C:\Users\Equinox\.lmstudio\models
```
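To confirm that a manually copied model landed where LM Studio looks, a short script can list what is in that folder. This sketch assumes the default location under your home directory shown above and that your models are stored as `.gguf` files, a format LM Studio commonly uses; the function name is my own.

```python
from pathlib import Path


def list_model_files(models_dir: Path, suffix: str = ".gguf") -> list[str]:
    """Return paths of model files under models_dir, relative to it, sorted."""
    if not models_dir.is_dir():
        return []  # folder missing: nothing installed there yet
    return sorted(
        p.relative_to(models_dir).as_posix()
        for p in models_dir.rglob(f"*{suffix}")
        if p.is_file()
    )


# Assumed default location: <home>/.lmstudio/models
default_dir = Path.home() / ".lmstudio" / "models"
for name in list_model_files(default_dir):
    print(name)
```

If a file you copied is listed here but does not show up in the app, it may need to sit inside nested publisher and model subfolders, which is how downloaded models are typically arranged.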

## What Can Your Own ChatGPT Do?

What your privately hosted LLM can accomplish depends on your chosen model, hardware, and prompt-writing skills. There are dozens of models with specialized functions, but most can at least:

- Read and summarize source documents
- Discuss content you provide
- Parse images or videos

Many models are optimized for tool use, enabling them to interact with external apps, perform tasks automatically, or fetch live information.
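In practice, tool use usually means the model returns a structured request naming a function and its arguments, and your own code runs it. The sketch below shows only that dispatch step, with a made-up `set_light` tool and a hand-written tool call standing in for real model output; the exact message format depends on the model and server you use.

```python
import json


def set_light(room: str, on: bool) -> str:
    """Hypothetical tool: pretend to switch a light and report the result."""
    return f"{room} light turned {'on' if on else 'off'}"


# Registry of functions the model is allowed to call.
TOOLS = {"set_light": set_light}


def run_tool_call(call_json: str) -> str:
    """Parse a tool call of the form {"name": ..., "arguments": {...}} and run it."""
    call = json.loads(call_json)
    func = TOOLS.get(call["name"])
    if func is None:
        return f"unknown tool: {call['name']}"
    return func(**call["arguments"])


# Hand-written stand-in for what a tool-capable model might emit.
example_call = '{"name": "set_light", "arguments": {"room": "kitchen", "on": true}}'
print(run_tool_call(example_call))  # prints "kitchen light turned on"
```

Keeping an explicit registry means the model can only trigger functions you have deliberately exposed, which matters once those functions control real devices.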

With some experimentation, you can even integrate local LLMs with platforms like Home Assistant to build your own talking, thinking (sort of) smart home.
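Integrations like that typically talk to the model over HTTP. LM Studio can run a local server that mimics the OpenAI chat completions API; the sketch below assumes that server is enabled and listening on `http://localhost:1234`, and that a model with the placeholder name `local-model` is loaded. Adjust both to match your setup.

```python
import json
import urllib.request

# Assumed address of LM Studio's local server; change it if yours differs.
BASE_URL = "http://localhost:1234/v1"


def build_chat_payload(prompt: str, model: str = "local-model") -> dict:
    """Build an OpenAI-style chat completion request body."""
    return {
        "model": model,  # placeholder name; use the identifier of a loaded model
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }


def ask_local_model(prompt: str, model: str = "local-model") -> str:
    """Send the prompt to the local server and return the reply text."""
    request = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(build_chat_payload(prompt, model)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["choices"][0]["message"]["content"]
```

With the server running, calling `ask_local_model("Is the garage door open?")` should return the model's reply as a string that another program can act on.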

## Beyond Cost Savings: It’s Just Fun

Aside from the obvious benefits like cost savings, privacy, and customization, hosting your own LLM is simply enjoyable. It isn’t every day that groundbreaking new technology becomes widely accessible to home users—especially one as potentially disruptive as local large language models.

If you’re curious and willing to experiment, jumping into self-hosted AI can open up a new world of possibilities.

---

Whether you’re driven by privacy concerns, integration needs, or just a desire to tinker, running your own AI locally is an exciting and rewarding endeavor. Give it a try—you might just find it’s the perfect complement or alternative to services like ChatGPT.

https://www.howtogeek.com/i-self-host-my-own-private-chatgpt-with-this-tool/